Dispatch is a new type of post focused on work in progress—brief vignettes that highlight methods, artifacts, and analysis from the field.

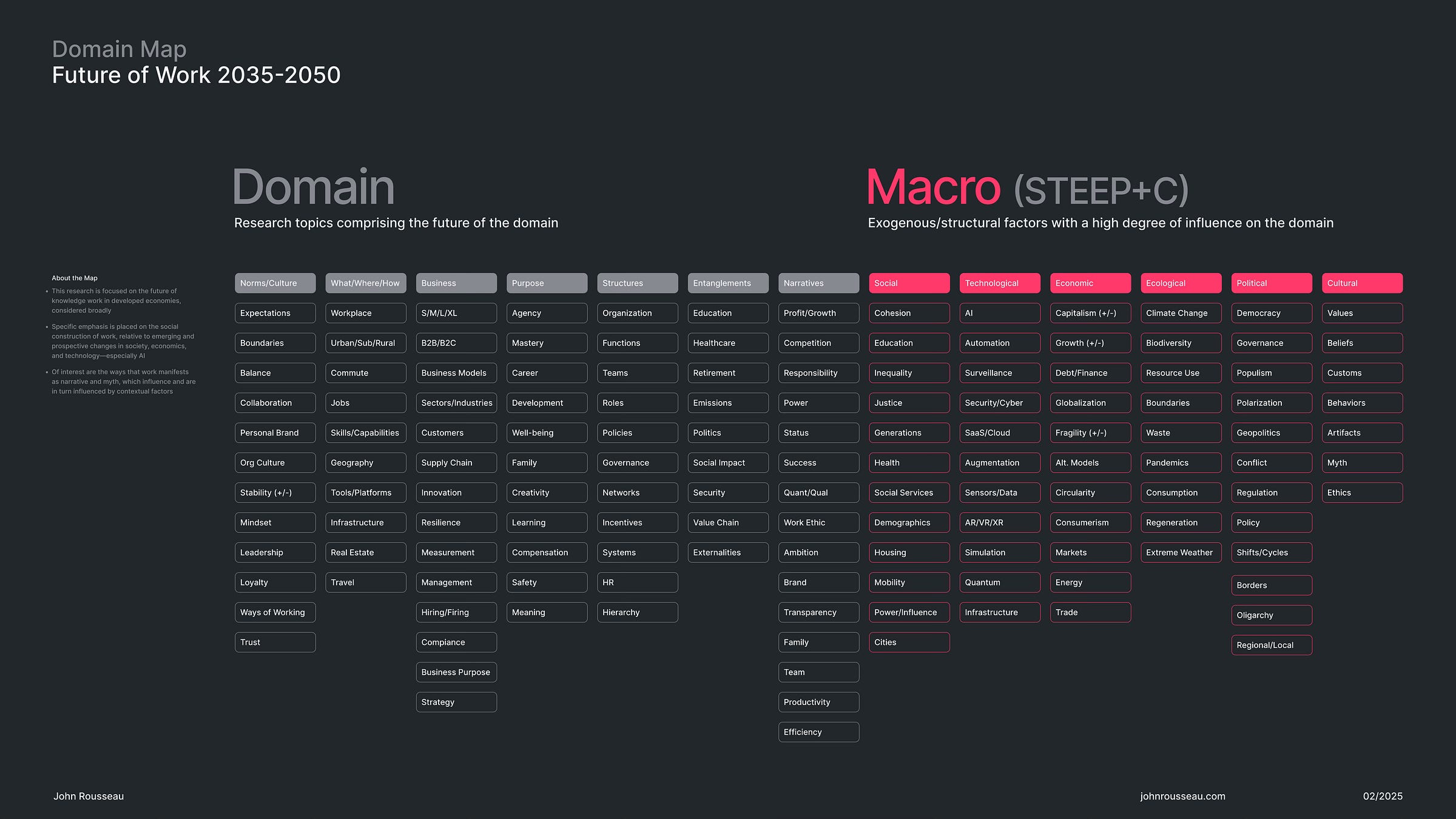

This post returns to the Future of Work and covers two topics: first, an evolved domain map that reflects my current research agenda and provides a better basis for sense-making; second, a futures wheel that considers the implications of a future where Agentic AI replaces a large number of knowledge work roles. If the first part is too academic, feel free to skip to the second.

This work builds on previous posts that may be of interest:

Part 1: A New Domain Map

I introduced the domain map in System of Systems. To recap, it reflects the initial scope of a research effort—the themes perceived to be most relevant to the future of the domain, and thus the focus of horizon scanning activities. The map is developed via a collaborative process and facilitates shared understanding. It surfaces initial assumptions and theories of change, which tend to evolve over the course of a program along with the map itself. It isn’t a static artifact.

The tool was developed at the University of Houston as part of the Framework Foresight approach, which is where I learned it. In that approach, the domain map focuses on a limited number of issues directly related to the topic, which helps to manage research constraints and ensure coverage of the most critical subjects. To capture broader signals of change, the findings are augmented with STEEP (social, technological, economic, environmental, and political) trends synthesized over time from a wide variety of research programs and from general scanning.

Expanding Context for Complexity

In practice, I have found it useful to modify the configuration of the map to include STEEP, rather than rely on the integration of generic trends. This follows from a belief that most foresight programs are focused on change in complex adaptive systems, and thus emerging relationships across these systems of systems are important and should be made explicit. In other words, the implications of any change are specific to the context of an interaction (e.g., an aspect of climate change likely has different implications for the future of work than the future of food). That was the premise of the previous example.

Since then, I have expanded the concept to differentiate between two layers of change:

The Domain Layer: Research topics comprising the future of the domain, based on the UH approach

The Macro Layer: Exogenous and/or structural factors with a high degree of influence on the domain, structured via STEEP with the addition of “C” for culture in place of the more common and limited “V” for values

This framework widens the aperture without sacrificing relevance. It contextualizes the domain within broader structural changes while also interpreting those changes from the standpoint of the domain. In this way, it provides a richer foundation for generating hypotheses and novel theories of change across the layers.

Foresight as Sense-Making

The more you work with the map, the more new connections emerge. In this way, the expanded framework is as much a canvas for creativity as a research guide. This aligns with my preferred process—co-creation with stakeholders as part of a structured learning journey. Here’s how I might facilitate a work session at the start of a foresight program:

Suggested Facilitation:

Start with the Domain layer: generate a list of the most important topics comprising the future of the domain

Cluster and synthesize outputs to identify top-level themes and sub-topics; identify any that would better fit in Macro, and discard any that no longer fit

Zoom out and populate the Macro layer, working across the categories

Resolve gaps and redundancies; reach consensus on the draft

Discuss and capture emerging hypotheses—theories of change drawn from across the map

Identify implications for research and reframe strategic questions

About the Map

This example reflects my perspective and is not intended to be universal. I am interested in the future of knowledge work in developed economies, where the meaning and structure of work are under strain and subject to significant future disruption amid social change and technological advancement (especially AI).

There are many overlapping issues at play. The Domain section includes the following categories specific to the focal issue:

Norms/Culture: The social norms that shape work, and the culture of work itself

What/Where/How: The infrastructures of work

Business: The role, nature, and dark matter of organizations

Purpose: The role of work for individuals in society

Structures: The means by which work is organized and managed

Entanglements: Adjacent systems embedded in or affected by work

Narratives: The myths and stories of work—both tacit and explicit

The Macro section adds contextual depth across the STEEP+C categories. Note that some topics are inherently more important than others; AI, for example, is a major focus area. That said, the most impactful causal relationships occur across categories, such as the ways that changes related to AI influence narratives, culture, and economics, among others (and vice versa). Horizon scanning takes these overlaps into account and seeks weak signals and interactions across systemic boundaries.

More to come as things evolve.

Part 2: Agentic AI

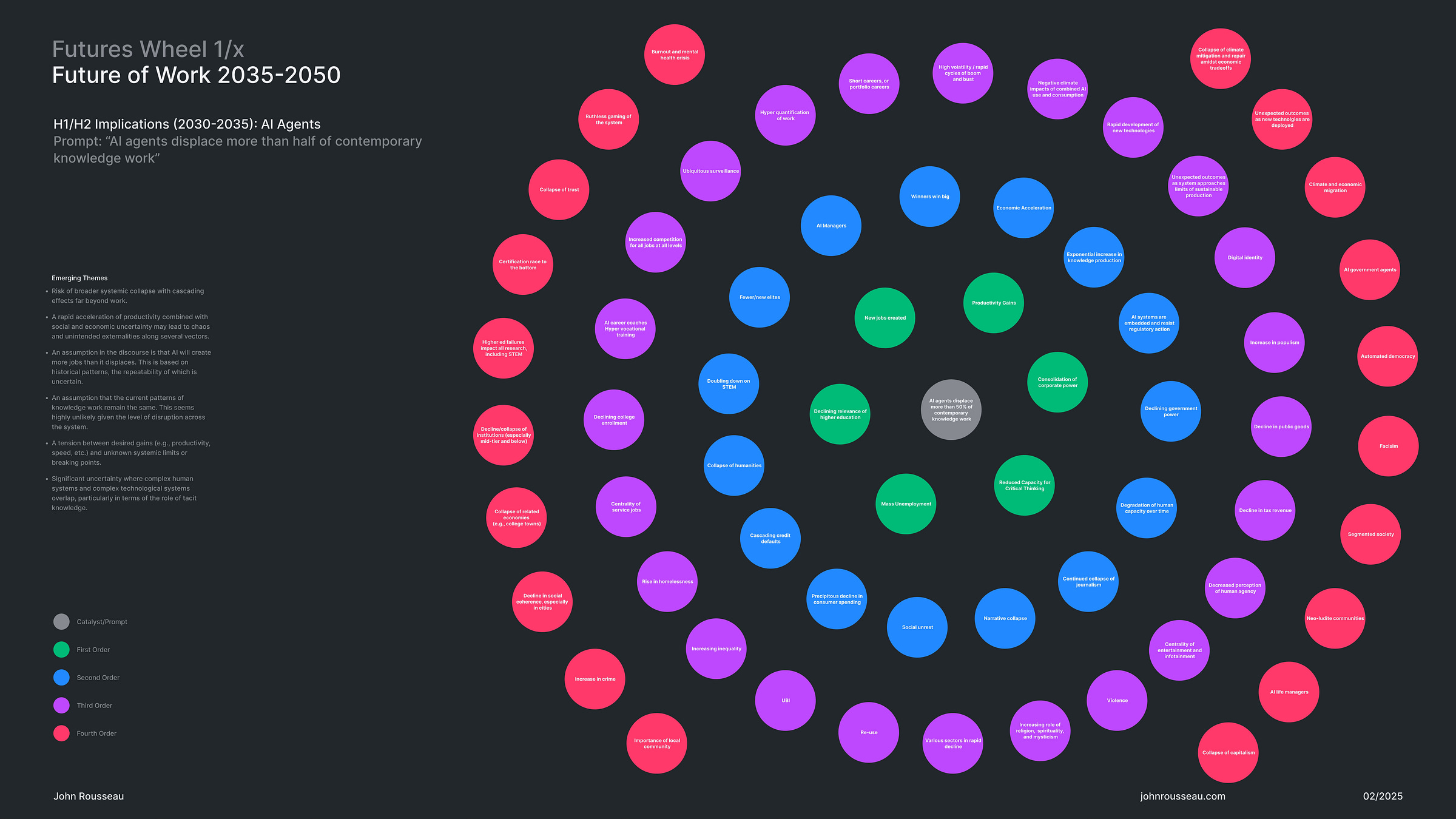

The Futures Wheel is a foresight tool designed to explore the systemic implications of a prospective change. It begins with a single prompt—in this case, “AI agents displace more than half of contemporary knowledge work” (H1/H2, 2030-2035), a signal that emerged during horizon scanning per the domain map above.

Purpose

The goal is not to evaluate the likelihood of the prompt, which is assumed for the sake of the exercise. Rather, by developing a broad array of prospective outcomes, the exercise expands our awareness of what is possible and preferable. The map is not intended as an exhaustive representation so much as a creative tool to externalize assumptions, inspire more nuanced discourse about systemic causality, and reflect on our agency to influence preferable futures. It is common to produce many of these during a foresight program or workshop.

This example was inspired by current discourse around “Agentic AI,” which runs the gamut from skepticism that advanced agents are feasible to hyperbolic predictions about the future of work. Notably, the latter tend to overlook the implications of a disruptive future, assuming that at least as many jobs will be created as are destroyed. This is, to put it mildly, a significant assumption. It is good practice to imagine both positive and negative outcomes, and I attempted to do that here.

Thus the first-order implications are:

New jobs created

Productivity gains

Consolidation of corporate power

Mass unemployment

Declining relevance of higher education

From each of these, new implications emerge. For example, productivity gains lead to economic acceleration and an exponential increase in knowledge production. Economic acceleration, in turn, leads to increased volatility and rapid boom/bust cycles, further destabilizing the job market, and so on. Note that the working map was edited for visual clarity and to fit the slide format shown here; there are many more issues to explore.

Themes that emerged from the exercise:

Risk of broader systemic collapse with cascading effects far beyond work.

A rapid acceleration of productivity combined with social and economic uncertainty may lead to chaos and unintended externalities along several vectors.

An implicit assumption that current patterns of knowledge work will remain the same, which seems highly unlikely given the level of disruption across the system.

A tension between desired gains (e.g., productivity and speed) and unknown systemic limits or breaking points.

Significant uncertainty where complex human systems and complex technological systems overlap, particularly in terms of the role of tacit knowledge.

Of these, the risk of systemic collapse is particularly acute, leading to negative outcomes for climate and planetary health, social coherence, and democratic governance, among others. Optimists might argue that the fifty percent threshold in the prompt is too high and overplays the risk, or that advances in AI might also mitigate specific harms. I would invite them to propose an alternative prompt and run the exercise again, comparing assumptions, outcomes, and theories of change across these and other examples.